Reinforcement learning (RL) has made tremendous progress in recent years towards addressing real-life problems – and offline RL has made it even more practical. Instead of interacting directly with the environment, we can now train many algorithms from a single pre-recorded dataset. However, we lose the data-efficiency advantage of offline RL when it comes to evaluating the resulting policies.

For example, when training robotic manipulators, robot resources are usually limited, and training many policies with offline RL on a single dataset gives us a large data-efficiency advantage over online RL. Evaluating each policy, however, is an expensive process that requires interacting with the robot thousands of times. When we need to choose the best algorithm, hyperparameters, and number of training steps, the problem quickly becomes intractable.

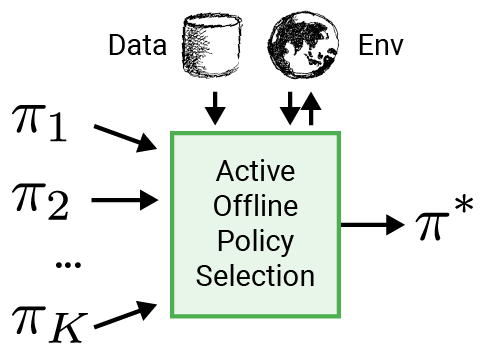

To make RL more applicable to real-world applications like robotics, we propose an intelligent evaluation procedure for selecting the policy to deploy, called active offline policy selection (A-OPS). In A-OPS, we make use of the pre-recorded dataset and allow limited interactions with the real environment to improve the selection quality.

To minimise interactions with the real environment, we implement three key features:

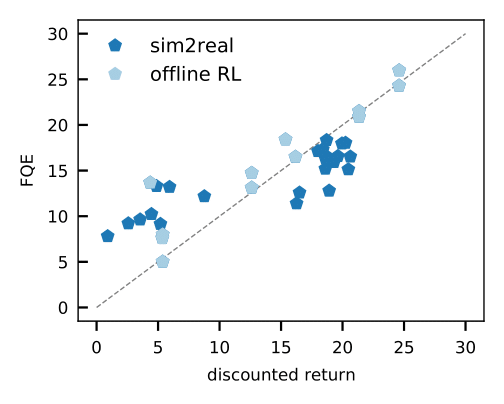

- Off-policy policy evaluation, such as fitted Q-evaluation (FQE), allows us to make an initial guess about the performance of each policy based on an offline dataset. It correlates well with the ground truth performance in many environments, including real-world robotics, where it is applied for the first time.

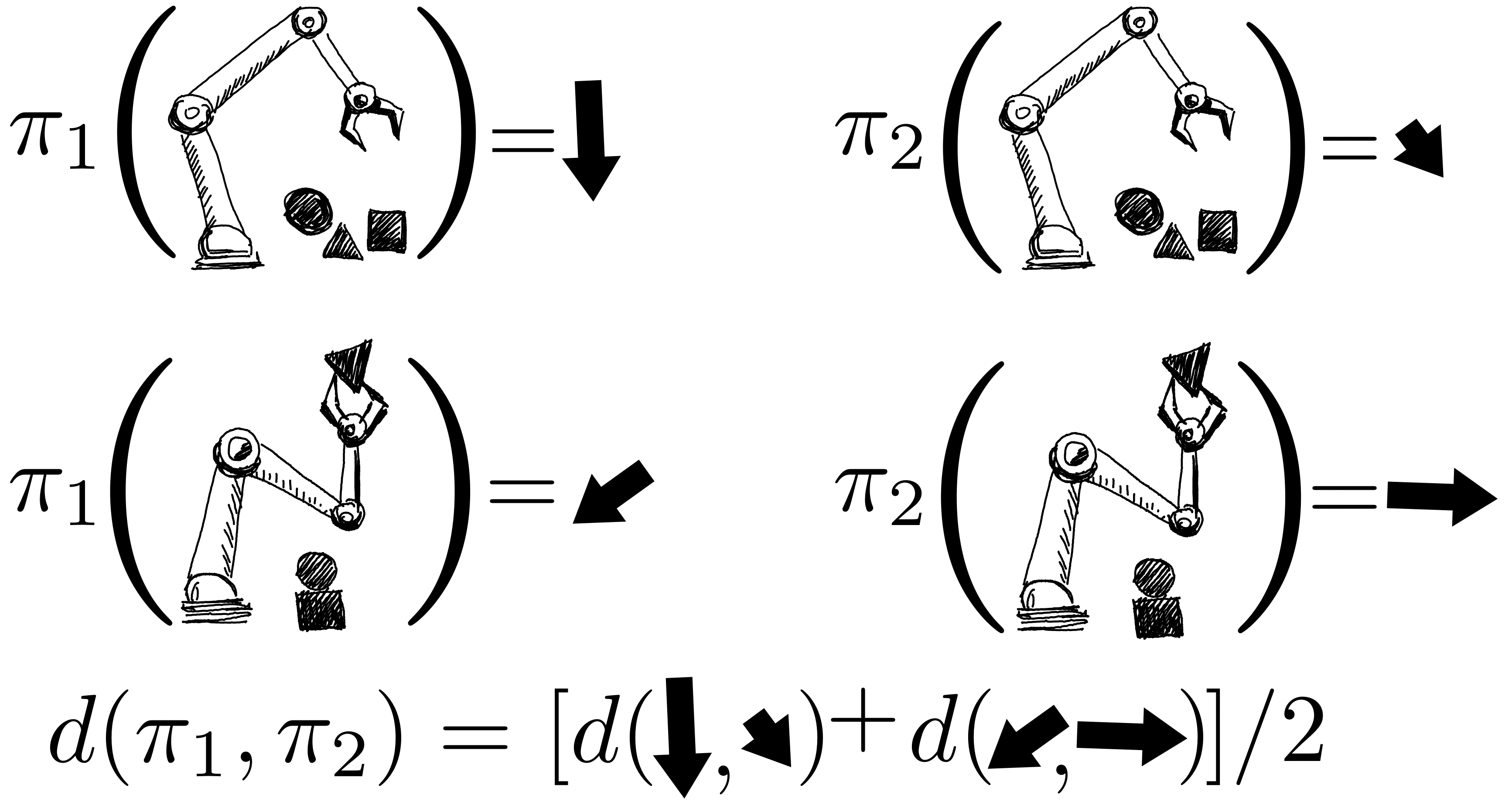

- The returns of the policies are modelled jointly using a Gaussian process, where observations include FQE scores and a small number of newly collected episodic returns from the robot. After evaluating one policy, we gain knowledge about all policies because their distributions are correlated through the kernel between pairs of policies. The kernel assumes that if policies take similar actions – such as moving the robotic gripper in a similar direction – they tend to have similar returns.

- To be more data-efficient, we apply Bayesian optimisation and prioritise more promising policies to be evaluated next, namely those that have high predicted performance and large variance.
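
The three features above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the released A-OPS code: the function names (`policy_kernel`, `gp_posterior`, `select_next_policy`), the exponential form of the kernel, the use of FQE scores as the Gaussian process prior mean, the upper-confidence-bound rule, and all numeric values are choices made here for illustration. The "environment" is a stand-in that returns noisy samples of made-up true returns.

```python
import numpy as np

def policy_kernel(actions, length_scale=1.0):
    """Covariance between policies based on how similar their actions are.

    actions: array of shape (n_policies, n_states, action_dim), holding each
    policy's action on the same set of states (e.g. states from the dataset).
    Assumption: an exponential kernel on the mean action distance.
    """
    diff = actions[:, None, :, :] - actions[None, :, :, :]
    dist = np.linalg.norm(diff, axis=-1).mean(axis=-1)  # (n_policies, n_policies)
    return np.exp(-dist / length_scale)

def gp_posterior(kernel, prior_mean, observed_idx, observed_returns, noise_var=0.1):
    """Posterior mean and variance of every policy's return.

    Simplification: FQE scores enter as the prior mean, and episodic returns
    are noisy observations of the policies listed in observed_idx.
    """
    if len(observed_idx) == 0:
        return prior_mean.copy(), np.diag(kernel).copy()
    idx = np.asarray(observed_idx)
    y = np.asarray(observed_returns, dtype=float)
    k_oo = kernel[np.ix_(idx, idx)] + noise_var * np.eye(len(idx))
    k_ao = kernel[:, idx]
    mean = prior_mean + k_ao @ np.linalg.solve(k_oo, y - prior_mean[idx])
    cov = kernel - k_ao @ np.linalg.solve(k_oo, k_ao.T)
    return mean, np.clip(np.diag(cov), 0.0, None)

def select_next_policy(mean, var, beta=2.0):
    """Pick the policy with the highest upper confidence bound."""
    return int(np.argmax(mean + beta * np.sqrt(var)))

# Toy run with synthetic data (all values are made up for illustration).
rng = np.random.default_rng(0)
n_policies, n_states, action_dim = 5, 20, 2
actions = rng.normal(size=(n_policies, n_states, action_dim))
true_returns = rng.normal(size=n_policies)  # unknown in practice
fqe_scores = true_returns + rng.normal(scale=0.5, size=n_policies)

kernel = policy_kernel(actions)
observed_idx, observed_returns = [], []
for _ in range(10):  # evaluation budget: 10 episodes
    mean, var = gp_posterior(kernel, fqe_scores, observed_idx, observed_returns)
    i = select_next_policy(mean, var)
    observed_idx.append(i)
    observed_returns.append(true_returns[i] + rng.normal(scale=0.3))

mean, var = gp_posterior(kernel, fqe_scores, observed_idx, observed_returns)
recommended = int(np.argmax(mean))  # policy we would deploy
```

Because the kernel couples policies that act alike, each new episode updates the posterior for its neighbours as well, which is what lets a small evaluation budget go a long way in this sketch.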

We demonstrated this procedure in a number of environments across several domains: dm-control, Atari, and both simulated and real robotics. Using A-OPS reduces the regret rapidly, and with a moderate number of policy evaluations, we identify the best policy.
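
Regret here can be read as simple regret: the gap between the true return of the best available policy and the true return of the policy the procedure recommends. The helper below is a small sketch under that assumed definition; the function name `simple_regret` and the example values are hypothetical.

```python
import numpy as np

def simple_regret(true_returns, selected_idx):
    """Gap between the best policy's true return and the selected policy's."""
    true_returns = np.asarray(true_returns, dtype=float)
    return float(true_returns.max() - true_returns[selected_idx])

# Made-up true returns for three policies; selecting the best one gives zero regret.
example_returns = [1.0, 3.0, 2.0]
regret_if_best = simple_regret(example_returns, 1)
regret_if_third = simple_regret(example_returns, 2)
```

In a benchmark, the true returns would come from extensive evaluation of every policy, which is only feasible for measuring the selection procedure, not for running it.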

Our results suggest that it’s possible to perform effective offline policy selection with only a small number of environment interactions by utilising the offline data, a special kernel, and Bayesian optimisation. The code for A-OPS is open-sourced and available on GitHub with an example dataset to try.
